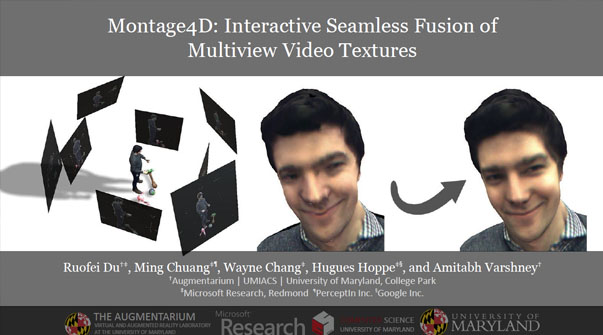

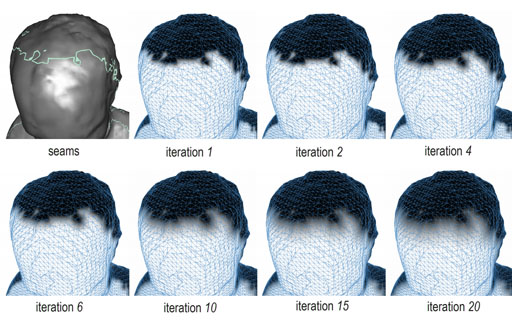

The commoditization of virtual and augmented reality devices and the availability of inexpensive consumer depth cameras have catalyzed a resurgence of interest in spatiotemporal performance capture. Recent systems like Fusion4D and Holoportation address several crucial problems in the real-time fusion of multiview depth maps into volumetric and deformable representations. Nonetheless, stitching multiview video textures onto dynamic meshes remains challenging due to imprecise geometries, occlusion seams, and critical time constraints. In this paper, we present a practical solution towards real-time seamless texture montage for dynamic multiview reconstruction. We build on the ideas of dilated depth discontinuities and majority voting from Holoportation to reduce ghosting effects when blending textures. In contrast to their approach, we determine the appropriate blend of textures per vertex using view-dependent rendering techniques, so as to avert fuzziness caused by the ubiquitous normal-weighted blending. By leveraging geodesics-guided diffusion and temporal texture fields, our algorithm mitigates spatial occlusion seams while preserving temporal consistency. Experiments demonstrate significant enhancement in rendering quality, especially in detailed regions such as faces. We envision a wide range of applications for Montage4D, including immersive telepresence for business, training, and live entertainment.
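The per-vertex, view-dependent blending and temporal smoothing mentioned in the abstract can be illustrated with a minimal sketch. It is an assumption-laden illustration, not the published formulation: the function names, the cosine-power weighting, the exponent `alpha`, and the update `rate` are all hypothetical, and the geodesics-guided diffusion step is not modeled.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / n for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def view_dependent_weights(viewer_dir, camera_dirs, visible, alpha=2.0):
    """Hypothetical per-vertex blend weights that favor cameras aligned with the viewer.

    viewer_dir:  direction from the vertex toward the viewer.
    camera_dirs: directions from the vertex toward each camera.
    visible:     False where the vertex is occluded in that camera.
    alpha:       assumed sharpness exponent (larger = more selective).
    """
    v = normalize(viewer_dir)
    raw = []
    for d, vis in zip(camera_dirs, visible):
        cos = max(0.0, dot(v, normalize(d)))
        raw.append(cos ** alpha if vis else 0.0)
    total = sum(raw)
    if total == 0.0:
        return [0.0] * len(raw)  # no usable camera for this vertex
    return [w / total for w in raw]


def temporal_step(current, target, rate=0.1):
    """Move the current weights a fraction `rate` toward the target, then renormalize."""
    blended = [c + rate * (t - c) for c, t in zip(current, target)]
    total = sum(blended)
    return [b / total for b in blended] if total > 0.0 else blended


# Three cameras around one vertex; the viewer looks from the second camera's side.
cams = [(1, 0, 0), (0, 0, 1), (-1, 0, 0)]
target = view_dependent_weights((0, 0, 1), cams, [True, True, True])
weights = temporal_step([1.0, 0.0, 0.0], target, rate=0.25)
```

Updating the weights gradually rather than jumping to the target is one simple way to avoid visible popping when the viewer moves. Unlike normal-weighted blending, these weights depend on where the viewer is, not only on the surface orientation.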


Publications

Journal of Computer Graphics Techniques (JCGT), 2019.

Keywords: texture montage, 3d reconstruction, texture stitching, view-dependent rendering, discrete geodesics, projective texture mapping, differential geometry, temporal texture fields, digital human

Proceedings of ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games (I3D), 2018.

Keywords: texture montage, 3d reconstruction, texture stitching, view-dependent rendering, discrete geodesics, projective texture mapping, differential geometry, temporal texture fields, digital human

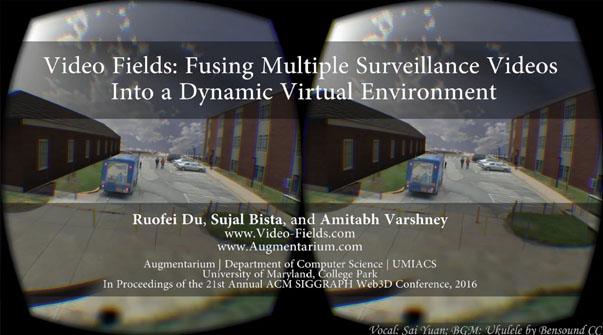

Proceedings of the 21st International Conference on Web3D Technology (Web3D), 2016.

Keywords: virtual reality; mixed-reality; video-based rendering; projection mapping; surveillance video; WebGL; WebVR; interactive graphics

Ruofei Du

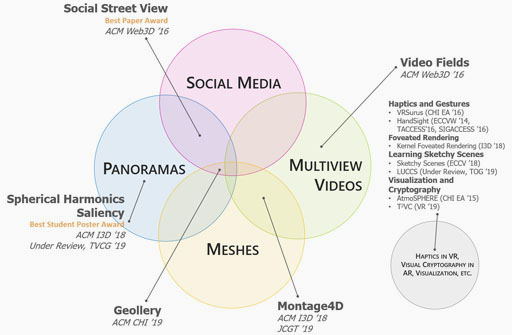

Ph.D. Dissertation, University of Maryland, College Park, 2018.

Keywords: social street view, geollery, spherical harmonics, 360 video, multiview video, montage4d, haptics, cryptography, metaverse, mirrored world

US Patent 10,504,274, 2019.


Cited By

- IBRNet: Learning Multi-View Image-Based Rendering. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Qianqian Wang, Zhicheng Wang, Kyle Genova, Pratul Srinivasan, Howard Zhou, Jonathan Barron, Ricardo Martin-Brualla, Noah Snavely, and Thomas Funkhouser.
- A Review of Video Surveillance Systems. Journal of Visual Communication and Image Representation. Omar Elharrouss, Noor Almaadeed, and Somaya Al-Maadeed.
- An Inexpensive Upgradation of Legacy Cameras Using Software and Hardware Architecture for Monitoring and Tracking of Live Threats. IEEE Access. Ume Habiba, Muhammad Awais, Milhan Khan, and Abdul Jaleel.
- Spatiotemporal Retrieval of Dynamic Video Object Trajectories in Geographical Scenes. Transactions in GIS. Yujia Xie, Meizhen Wang, Xuejun Liu, Ziran Wang, Bo Mao, Feiyue Wang, and Xiaozhi Wang.
- A Multi-resolution Approach for Color Correction of Textured Meshes. 2018 International Conference on 3D Vision (3DV). Mohammad Rouhani, Matthieu Fradet, and Caroline Baillard.
- Detection of Multicamera Pedestrian Trajectory Outliers in Geographic Scene. Wireless Communications and Mobile Computing. Wei Wang, Yujia Xie, and Xiaozhi Wang.
- Neural Body: Implicit Neural Representations with Structured Latent Codes for Novel View Synthesis of Dynamic Humans. CVPR 2021. Sida Peng, Yuanqing Zhang, Yinghao Xu, Qianqian Wang, Qing Shuai, Hujun Bao, and Xiaowei Zhou.
- Multi-camera Video Synopsis of a Geographic Scene Based on Optimal Virtual Viewpoint. Transactions in GIS. Yujia Xie, Meizhen Wang, Xuejun Liu, Xing Wang, Yiguang Wu, Feiyue Wang, and Xiaozhi Wang.
- GeoNeRF: Generalizing NeRF with Geometry Priors. https://arxiv.org/pdf/2111.13539.pdf. Mohammad Mahdi Johari, Yann Lepoittevin, and François Fleuret.
- Light Field Neural Rendering. https://arxiv.org/pdf/2112.09687.pdf. Mohammed Suhail, Carlos Esteves, Leonid Sigal, and Ameesh Makadia.
- A Self-occlusion Aware Lighting Model for Real-time Dynamic Reconstruction. IEEE Transactions on Visualization and Computer Graphics. Chengwei Zheng, Wenbin Lin, and Feng Xu.
- Scalable Neural Indoor Scene Rendering. ACM Transactions on Graphics. Xiuchao Wu, Jiamin Xu, Zihan Zhu, Hujun Bao, Qixing Huang, James Tompkin, and Weiwei Xu.
- Progressive Multi-scale Light Field Networks. arXiv.2208.06710. David Li and Amitabh Varshney.
- Efficient 3D Reconstruction, Streaming and Visualization of Static and Dynamic Scene Parts for Multi-client Live-telepresence in Large-scale Environments. arXiv.2211.14310. Leif Van Holland, Patrick Stotko, Stefan Krumpen, Reinhard Klein, and Michael Weinmann.
- Multi-Level Clustering Algorithm for Pedestrian Trajectory Flow Considering Multi-Camera Information. 2022 2nd International Conference on Computer Science, Electronic Information Engineering and Intelligent Control Technology (CEI). Wei Wang and Yujia Xie.
- EditableNeRF: Editing Topologically Varying Neural Radiance Fields by Key Points. arXiv.2212.04247. Chengwei Zheng, Wenbin Lin, and Feng Xu.
- Video-Geographic Scene Fusion Expression Based on Eye Movement Data. 2021 IEEE 7th International Conference on Virtual Reality (ICVR). Xiaozhi Wang, Yujia Xie, and Xing Wang.
- Multi-Camera Light Field Capture: Synchronization, Calibration, Depth Uncertainty, and System Design. 6. Elijs Dima.
- MonoMR: Synthesizing Pseudo-2.5D Mixed Reality Content From Monocular Videos. Applied Sciences. Dong-Hyun Hwang and Hideki Koike.
- Feature Based Object Tracking: A Probabilistic Approach. Florida Institute of Technology. Kaleb Smith.
- Reconstruction and Detection of Occluded Portions of 3D Human Body Model Using Depth Data From Single Viewpoint. U.S. Patent 10,818,078. Jie Ni and Mohammad Gharavi-Alkhansari.
- Heterogeneous Data Fusion. U.S. Patent 11,068,756. James Browning.
- Video Display Method and Device. CN110996087B. Feihu Luo.
- Image Processing Module, Image Processing Method, Camera Assembly and Mobile Terminal. CN112291479A. Jingyang Chang.
- Video Content Representation to Support the Hyper-reality Experience in Virtual Reality. 2021 IEEE Virtual Reality and 3D User Interfaces (VR). Hyerim Park and Woontack Woo.
- Multi-View Neural Human Rendering. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Minye Wu, Yuehao Wang, Qiang Hu, and Jingyi Yu.
- Spatiotemporal Texture Reconstruction for Dynamic Objects Using a Single RGB-D Camera. Computer Graphics Forum. Hyomin Kim, Jungeon Kim, Hyeonseo Nam, Jaesik Park, and Seungyong Lee.
- RealityCheck: Blending Virtual Environments with Situated Physical Reality. Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. Jeremy Hartmann, Christian Holz, Eyal Ofek, and Andrew Wilson.
- High-Precision 5DoF Tracking and Visualization of Catheter Placement in EVD of the Brain Using AR. ACM Transactions on Computing for Healthcare. Xuetong Sun, Sarah B. Murthi, Gary Schwartzbauer, and Amitabh Varshney.
- Volumetric Capture of Humans with a Single RGBD Camera Via Semi-Parametric Learning. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Rohit Pandey, Cem Keskin, Shahram Izadi, Sean Fanello, Anastasia Tkach, Shuoran Yang, Pavel Pidlypenskyi, Jonathan Taylor, Ricardo Martin-Brualla, Andrea Tagliasacchi, George Papandreou, and Philip Davidson.
- SIGNET: Efficient Neural Representation for Light Fields. 2021 IEEE/CVF International Conference on Computer Vision (ICCV). Brandon Feng and Amitabh Varshney.
- The Relightables: Volumetric Performance Capture of Humans with Realistic Relighting. ACM Transactions on Graphics. Kaiwen Guo, Peter Lincoln, Philip Davidson, Jay Busch, Xueming Yu, Matt Whalen, Geoff Harvey, Sergio Orts-Escolano, Rohit Pandey, Jason Dourgarian, Danhang Tang, Anastasia Tkach, Adarsh Kowdle, Emily Cooper, Mingsong Dou, Sean Fanello, Graham Fyffe, Christoph Rhemann, Jonathan Taylor, Paul Debevec, and Shahram Izadi.
- Instant Panoramic Texture Mapping with Semantic Object Matching for Large-Scale Urban Scene Reproduction. IEEE Transactions on Visualization and Computer Graphics. Jinwoo Park, Ik-Beom Jeon, Sung-Eui Yoon, and Woontack Woo.
- LookinGood: Enhancing Performance Capture with Real-time Neural Re-Rendering. ACM Transactions on Graphics. Ricardo Martin-Brualla, Rohit Pandey, Shuoran Yang, Pavel Pidlypenskyi, Jonathan Taylor, Julien Valentin, Sameh Khamis, Philip Davidson, Anastasia Tkach, Peter Lincoln, Adarsh Kowdle, Christoph Rhemann, Dan B Goldman, Cem Keskin, Steve Seitz, Shahram Izadi, and Sean Fanello.
- Image-guided Neural Object Rendering. 8th International Conference on Learning Representations. Justus Thies, Michael Zollhöfer, Christian Theobalt, Marc Stamminger, and Matthias Nießner.
- MetaStream: Live Volumetric Content Capture, Creation, Delivery, and Rendering in Real Time. Proceedings of the 29th Annual International Conference on Mobile Computing and Networking. Yongjie Guan, Xueyu Hou, Nan Wu, Bo Han, and Tao Han.
- Local Implicit Ray Function for Generalizable Radiance Field Representation. arXiv.2304.12746. Xin Huang, Qi Zhang, Ying Feng, Xiaoyu Li, Xuan Wang, and Qing Wang.
- Adaptive Lighting Modeling in 3D Reconstruction with Illumination Properties Recovery. 2023 9th International Conference on Virtual Reality (ICVR). Ge Guo and Lianghua He.
- Dynamic Surface Capture for Human Performance by Fusion of Silhouette and Multi-view Stereo. The 18th ACM SIGGRAPH International Conference on Virtual-Reality Continuum and its Applications in Industry. Zheng Zhang, You Li, Xiangrong Zeng, Sheng Tan, and Changhua Jiang.
- Enabling Artificial Intelligence Analytics on the Edge. London South Bank University. Vasilis Tsakanikas.
- NeVRF: Neural Video-based Radiance Fields for Long-duration Sequences. arXiv.2312.05855. Minye Wu and Tinne Tuytelaars.
- Enriching Telepresence with Semantic-driven Holographic Communication. Proceedings of the 22nd ACM Workshop on Hot Topics in Networks. Ruizhi Cheng, Kaiyan Liu, Nan Wu, and Bo Han.
- DARF: Depth-Aware Generalizable Neural Radiance Field. Displays. Yue Shi, Dingyi Rong, Chang Chen, Chaofan Ma, Bingbing Ni, and Wenjun Zhang.